An introduction to Google Webmaster Tools and Bing Webmaster Center

Last updated on by cjh

As a web site owner, being listed in Google is perhaps the best way for people to find you and what you’re offering, and a Bing listing is also of great benefit. SEO (Search Engine Optimisation) is an all-important factor in helping you compete with other web sites for those prized high-ranking spots. While these two search engines will generally do their own thing when scanning and indexing your web pages, it can be helpful to know what they see when they do so.

Google started off by offering Google Webmaster Tools, a service that allows you, the site owner, to see the information that Google holds about your site, along with statistics related to your content. Bing’s more recent (and perhaps less feature-filled) Webmaster Center aims to offer similar information.

Being able to see how Googlebot and Bingbot see your web site can help you improve your chances of a more accurate listing position if your site is currently unfriendly towards them. After all, if the search bots can’t see what your web site content is all about, it is difficult for that content to be indexed and ranked accordingly.

This article gives an introduction to some of the features each service offers.

To access the information that Google and Bing hold about your site, you’ll need to sign up for an account with each service. You’ll already have one if you use the likes of Gmail, YouTube, Picasa, etc. (a Google Account) or Hotmail, Live Mail, SkyDrive, etc. (a Bing / Live Account).

Each service offers a home screen that lists the web sites you have added, as you are not limited to a single web site. From here you can select each site individually to view its data, or see that you have yet to verify ownership of a domain name.

There is also a section for messages from Google and Bing, which is where Google will tell you if it has detected viruses or other nasty software hosted on your web site.

Before Google or Bing will present you with information about your web site, you must prove to them that the web site in question is in fact yours (or that you’ve been given permission to access site data). To do this, you are asked to verify site ownership with Bing and Google by demonstrating that you have access to the web site.

The verification process is similar for both Google and Bing. Both offer the choice of either uploading a custom HTML / XML file to your web site’s root directory, or adding a custom <meta> element to your home page. Once you’ve added your web site via the Bing / Google home screen, you’ll be taken to the verification process. Remember to pick one or the other; doing both isn’t necessary.

Bing will offer you the XML file “BingSiteAuth.xml”, whereas Google will offer you the HTML file “googleZ26Y25X24.html”. It is likely that the Bing filename will be exactly as listed, whereas the Google filename will be unique to each web site or account holder. In either case, the file must be uploaded to your web site’s root directory, so that it is accessible online at an address such as example.com/BingSiteAuth.xml (Bing) or example.com/googleZ26Y25X24.html (Google).
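As a rough illustration of where the verification files need to end up, the Python sketch below builds the address at which a file in the root directory would be served. The function name `verification_url` is made up for this example, and the default `http://` scheme for bare host names is an assumption; the filenames are the sample ones used above.

```python
from urllib.parse import urljoin, urlsplit


def verification_url(site: str, filename: str) -> str:
    """Return the address at which a verification file in the site root is served."""
    # Accept a bare host name such as "example.com" as well as a full URL.
    # Assumption: plain http is used when no scheme is given.
    if "://" not in site:
        site = "http://" + site
    parts = urlsplit(site)
    root = f"{parts.scheme}://{parts.netloc}/"
    # Keep only the file name so the result always points at the root directory.
    return urljoin(root, filename.rsplit("/", 1)[-1])
```

Calling `verification_url("example.com", "BingSiteAuth.xml")` gives the root-level address for the Bing file, even if the site address passed in points at a page deeper within the site.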

A second option is to add a custom <meta> HTML element to your home page, which Google and Bing can scan. The <meta> element will be unique for each web site / account owner, and must be placed between the opening <head> and closing </head> HTML elements of the home page, for example:

    <html>
    <head>
        <title>My Home Page - Welcome</title>
        <meta name="google-site-verification" content="Z26Y25X24" />
        <meta name="msvalidate.01" content="A1B2C3D4E5" />
        <style>@import "stylesheet.css";</style>
        <script type="text/javascript" src="scripts.js"></script>
    </head>
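Before selecting “verify”, it can be worth confirming that the <meta> elements really did end up inside the <head> of your home page. The Python sketch below is one possible way to check a saved copy of the page using only the standard library; the class and function names are invented for this example, and it only looks for the two `name` values shown above.

```python
from html.parser import HTMLParser


class HeadMetaCollector(HTMLParser):
    """Collect name/content pairs from <meta> elements found inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "meta" and self.in_head:
            attributes = dict(attrs)
            name = attributes.get("name")
            if name:
                self.meta[name.lower()] = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def verification_tags(html: str) -> dict:
    """Return the Google / Bing verification values found in the page head."""
    parser = HeadMetaCollector()
    parser.feed(html)
    wanted = ("google-site-verification", "msvalidate.01")
    return {name: parser.meta[name] for name in wanted if name in parser.meta}
```

If the returned dictionary is missing one of the two names, the matching element is either absent or sits outside the <head> section.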

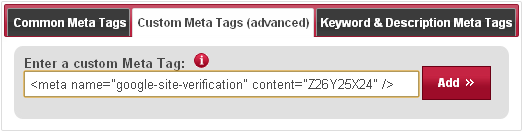

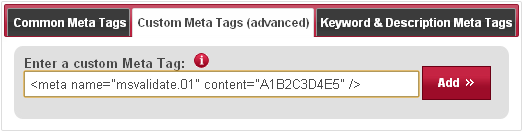

This is the option you must use if you’re a Freeola InstantPro Website Builder customer. Simply log in to your InstantPro account, find the “Webmaster Tools” section, look for the “Site Meta Tags” section and select “Manage Meta Tags”. Here, you can add the custom meta tags that Google and Bing provide for you. You must add them one at a time.

Don't forget, once you've added the code above, you also need to "enable" it from the same page.

Once you’ve completed one of the verification options above, you’ll need to select “verify” within Google and / or Bing to action the process, which is usually completed in a matter of seconds. By asking you to upload content to your web site that Google and Bing have assigned, they can see that you have full access to the web site.

Google and Bing also offer you the option of verifying your web site ownership via your web site’s DNS records, while Google also allows you to verify via your Google Analytics account, if you have one.

If you wish to give others access to your web site’s search data, they can add your web site to their own account, and you’ll then need to add their verification file / code to your web site on their behalf. Be careful though, as they will also be able to use the remove URL features.

With the verification processes completed, you can now freely view data that Google and Bing have compiled about your web site.

Both Google and Bing use a dashboard to link you to the different sections of information they offer, while also giving you a summary of some of the data they have about your web site. Google gives a summary of search queries, crawl errors, keywords, links and sitemaps, while Bing offers a summary of traffic, index, crawl and error data. Clicking on each of the summaries will take you to the detailed page for that section.

One of the main benefits of the Google and Bing services is being able to see where the search bots are struggling to crawl your web pages. The “Crawl Errors / Details” section lists any URLs that the search bots struggled to access, and the possible reasons why.

It is good to know why this might be happening, and often it’s down to a 404 error because the bots are trying to crawl a page that doesn’t exist. Looking at this data you might see that you’ve misspelled a URL hyperlink from other web pages within your site (which should be an easy fix), or that someone is linking to a page incorrectly.

If this is happening, you could try to contact the webmaster of the linking site and ask them to update their link, or alternatively set up a 301 redirect from the incorrect web address to the correct one. Not only does this benefit your search results, but also your potential site visitors.
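How a 301 redirect is set up depends on your hosting, so the following is only a minimal sketch of the idea, written as a small Python WSGI application. The paths in `REDIRECTS` are hypothetical examples of mistyped addresses that might appear in a crawl error report; they are not taken from any real site.

```python
# Hypothetical mapping from mistyped paths (as they might appear under
# crawl errors) to the pages they were meant to reach.
REDIRECTS = {
    "/contcat.html": "/contact.html",
    "/cupcake-recipies.html": "/cupcake-recipes.html",
}


def redirect_app(environ, start_response):
    """Minimal WSGI app: 301 for known bad paths, 404 for everything else."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # A permanent redirect tells both crawlers and browsers to use the new address.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Page not found"]
```

To try it locally, the application could be served with `wsgiref.simple_server.make_server` from the Python standard library. On most shared hosting the same mapping would more likely live in the web server's own configuration.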

If you have an XML sitemap file, you can add it to Bing and Google. Bing currently only tells you that you’ve submitted one and when it was last downloaded, whereas Google tells you when it was last downloaded, how many of the listed URLs are currently being indexed, and offers you the chance to resubmit if you’ve updated it since its last download. If only a small proportion of the URLs listed in your sitemap are being indexed, it might be a good idea to look at your web pages for an obvious cause, such as incorrectly written URLs, minimal or non-unique content, or major errors in your HTML code.
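To put a number on the proportion of sitemap URLs being indexed, something along the lines of the Python sketch below could be used. It assumes a sitemap that follows the standard sitemaps.org format, and that you copy the indexed count by hand from the figure Google reports; the function names are made up for this example.

```python
import xml.etree.ElementTree as ET

# Namespace used by sitemaps that follow the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> list:
    """Return every <loc> value listed in an XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


def indexed_ratio(submitted: int, indexed: int) -> float:
    """Share of submitted URLs reported as indexed, from 0.0 to 1.0."""
    if submitted <= 0:
        return 0.0
    return indexed / submitted
```

For instance, a sitemap listing 40 URLs with 10 reported as indexed gives a ratio of 0.25, which would suggest it is worth checking the remaining pages for the problems described above.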

Google offers a feature that enables you to see a raw version of how it sees each web page, allowing you to see if there is something wrong with how you serve your pages.

This will show you the page content (HTML and all) and the HTTP headers that each page serves up, as Googlebot sees them, which shouldn’t be any different to what is served to users. However, using this feature might uncover some bugs in the server-side scripting language (PHP, Perl, etc.) that you use to generate your pages.

Remember, if you’re serving a different version of your pages to search engines and users, either accidentally or deliberately, you’ll likely find pages being excluded from the index. If you’ve downloaded any third-party scripts to use on your page, check to see if they are affecting what Googlebot is seeing.

Perhaps you have a mobile version of your site and have legitimately tried to serve it to the mobile version of Googlebot, leaving out content that isn’t mobile-friendly, but got this the wrong way around: the main Googlebot receives your mobile content, while Googlebot Mobile receives the desktop version of your site.
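The mix-up described above often comes down to the order in which the user agent is tested. The Python sketch below illustrates the idea only; it assumes the mobile crawler identifies itself with the token “Googlebot-Mobile” in its User-Agent header, which you should confirm against Google's own documentation, and the function name is hypothetical.

```python
def site_version(user_agent: str) -> str:
    """Return "mobile" or "desktop" for a crawler's User-Agent header."""
    ua = user_agent.lower()
    # Assumption: the mobile crawler's User-Agent contains "Googlebot-Mobile".
    # Test for the more specific token first: a plain "googlebot" check would
    # also match it and could send the wrong version of the site.
    if "googlebot-mobile" in ua:
        return "mobile"
    return "desktop"
```

Whichever version is chosen, the content served to a crawler should match what a real visitor on the same kind of device would receive.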

Knowing which search queries are being used to find your pages can be very interesting, but more useful can be seeing how these search queries convert into site visitors. Both Google and Bing allow you to see search queries that people have used which resulted in one of your pages being listed, and how those impressions compare against actual clicks.

If your pages are showing hundreds, maybe thousands, of impressions, yet only a minimal number of clicks, it might be time to look at each of those pages and see how you might alter the title and description to entice more people to click through to your page, rather than the site above or below your listing.

You might have a web page about cupcake recipes, and someone might have searched for “cupcake recipes”, resulting in your most relevant page being listed alongside others. However, you might be getting very few visitors because your page title is listed as “recipes home”, whereas the other pages you’re competing with might have a more enticing title such as “Tasty cupcake recipes for any occasion”.

Getting listed in search results is one thing; getting people to click your link is quite another. Be sure to consider each page title, and the description that’s included, as these are what the user sees and what will hopefully convince them to click your link. Being able to see which pages have low click rates can help you target poorly performing pages quickly.
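The comparison of impressions against clicks is simple arithmetic: the click-through rate is clicks divided by impressions. As a rough sketch, the Python below flags pages that are shown often but clicked rarely. The thresholds (at least 100 impressions, under 1% click-through) are arbitrary assumptions chosen for illustration, and the figures would be typed in or exported from the search query reports.

```python
from typing import NamedTuple


class QueryStats(NamedTuple):
    page: str
    impressions: int
    clicks: int


def click_through_rate(stats: QueryStats) -> float:
    """Clicks divided by impressions, or 0.0 when the page was never shown."""
    if stats.impressions == 0:
        return 0.0
    return stats.clicks / stats.impressions


def low_ctr_pages(rows, min_impressions=100, max_ctr=0.01):
    """Pages seen often in results but rarely clicked, lowest rate first."""
    flagged = [
        row for row in rows
        if row.impressions >= min_impressions and click_through_rate(row) < max_ctr
    ]
    return sorted(flagged, key=click_through_rate)
```

A page with 2,000 impressions and 10 clicks has a click-through rate of 0.5%, so it would be flagged, whereas a page with only 20 impressions is ignored because there is too little data to judge it.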

Google Webmaster Tools will show you the most common keywords it associates with your web site, and how significant it considers each keyword to be to your site. This information can help you to ensure that Google is seeing your web site for what it is really about. Having a low keyword score for words that are highly relevant to your content isn’t going to get you very far.

Freeola, for example, is an ISP that offers, amongst other things, broadband and web hosting. Using the keywords tool, Freeola can see whether Google has highlighted keywords that are associated with such services. If the keywords “broadband” and “hosting” aren’t showing up as common keywords within Google Webmaster Tools, that is a clear indication that the content of the broadband and web hosting sections could be fine-tuned to refer to those products more often.

Page titles, headings, and even the web address itself are all vital areas in which keywords referencing what the page is about should appear, but also consider the page content. Something as simple as a line of text that currently reads “connect to our service now for only £10” could be changed to “connect to our broadband service now for only £10”.
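A quick way to see whether a piece of page text mentions your keywords at all is to count them. The Python sketch below is only a simple word count under that assumption; it says nothing about how Google itself weighs keywords, and the function name is invented for this example.

```python
import re
from collections import Counter


def keyword_counts(text: str, keywords) -> dict:
    """Count whole-word, case-insensitive occurrences of each keyword."""
    words = Counter(re.findall(r"[a-z0-9']+", text.lower()))
    return {keyword: words[keyword.lower()] for keyword in keywords}
```

Run against the two versions of the sentence above, the count for “broadband” goes from zero to one, which is exactly the kind of small change being suggested.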

Don’t allow yourself to be frightened into thinking that working to ensure your most relevant keywords are included in your content will be considered spam. Search engines need reference points to look for in each page they scan, and the whole concept of the “keyword” is that these are words that are key to the page’s content.

Sometimes links to your pages contain query string data, in the form of a question mark at the end of the URL, and usually followed by a key / value pair dataset. Some of these might be legitimately used by you, as many web sites use this method to have scripting languages (such as PHP) generate pages based on query string data, with the site being powered by a single script, meaning you’re using URLs such as:

example.com?page=home

example.com?page=contact

example.com?page=news

... and that is fine; search engines have no issue with that.

Problems can occur, however, with stray query string data that is being included within links to your site that you’d rather not be included, such as:

example.com?refercode=abc123

example.com?sort=price

example.com?sort=stock

As in the example above, the refercode query string key could have any number of different values, making Bing and Google think they are all different web pages when in fact this isn’t the case, and a sort key will usually just reorder the same content.

By using URL parameters (Google) and URL Normalisation (Bing), you can tell each crawler about certain query string keys that you’d like to be ignored. Google goes one step further with its URL parameters option by allowing you to select how the parameter affects the page content.

Do be careful with this feature though, as if you make the wrong selections and exclude the wrong query data, you could be doing your site quite a disservice.
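To see the effect of ignoring a query string key, the Python sketch below removes chosen keys from a URL so that variations collapse to a single address. It only illustrates the idea and is not how either search engine implements the feature; the set `IGNORED_KEYS` and the function name are assumptions based on the example keys above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Keys from the examples above that do not change what the page is about.
IGNORED_KEYS = frozenset({"refercode", "sort"})


def normalise_url(url: str, ignored=IGNORED_KEYS) -> str:
    """Return the URL with ignored query string keys removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in ignored
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))
```

Note that a key such as `page` is deliberately left alone, since removing it would merge pages that really are different, which is the disservice warned about above.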

Google Webmaster Tools offers HTML suggestions for your pages. These suggestions are aimed at fishing out duplicate meta descriptions and page titles that might currently exist on some of your pages. Duplicates are easily introduced, but can potentially dilute the ability of those pages to be indexed. If 20 of your pages have the same title and description, Google might struggle to distinguish one of those pages from another.

Google may also suggest that some of your pages’ titles and meta descriptions are too long or too short, again meaning Google might struggle to correctly index those pages.
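Checks of this kind can also be run on your own list of pages before Google reports them. The Python sketch below finds titles shared by more than one URL and classifies a title or description by length. The length limits are passed in by the caller because Google's own thresholds are not stated here, and both function names are made up for this example.

```python
from collections import defaultdict


def duplicate_titles(pages: dict) -> dict:
    """Map each title used by more than one URL to the URLs that share it."""
    by_title = defaultdict(list)
    for url, title in pages.items():
        # Compare titles without regard to case or surrounding spaces.
        by_title[title.strip().lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


def length_check(text: str, shortest: int, longest: int) -> str:
    """Classify a title or description as "too short", "too long" or "ok"."""
    length = len(text.strip())
    if length < shortest:
        return "too short"
    if length > longest:
        return "too long"
    return "ok"
```

The same `duplicate_titles` function works for meta descriptions if you pass it a dictionary of URLs and descriptions instead.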

Google Webmaster Tools has many other features to offer you, and Bing Webmaster Center is growing to be an informative service as well. If you sign up to each service, which is recommended, be sure to have a browse of all the available sections to see if they are of any help to you.

Yahoo is another well-known search engine, and it used to have a similar service known as Yahoo Site Explorer. However, Yahoo has for a while been transitioning its organic search results pages to be powered by Bing, rather than using its own search database. This is now complete, and as a result, the Yahoo Site Explorer service is no longer available.
